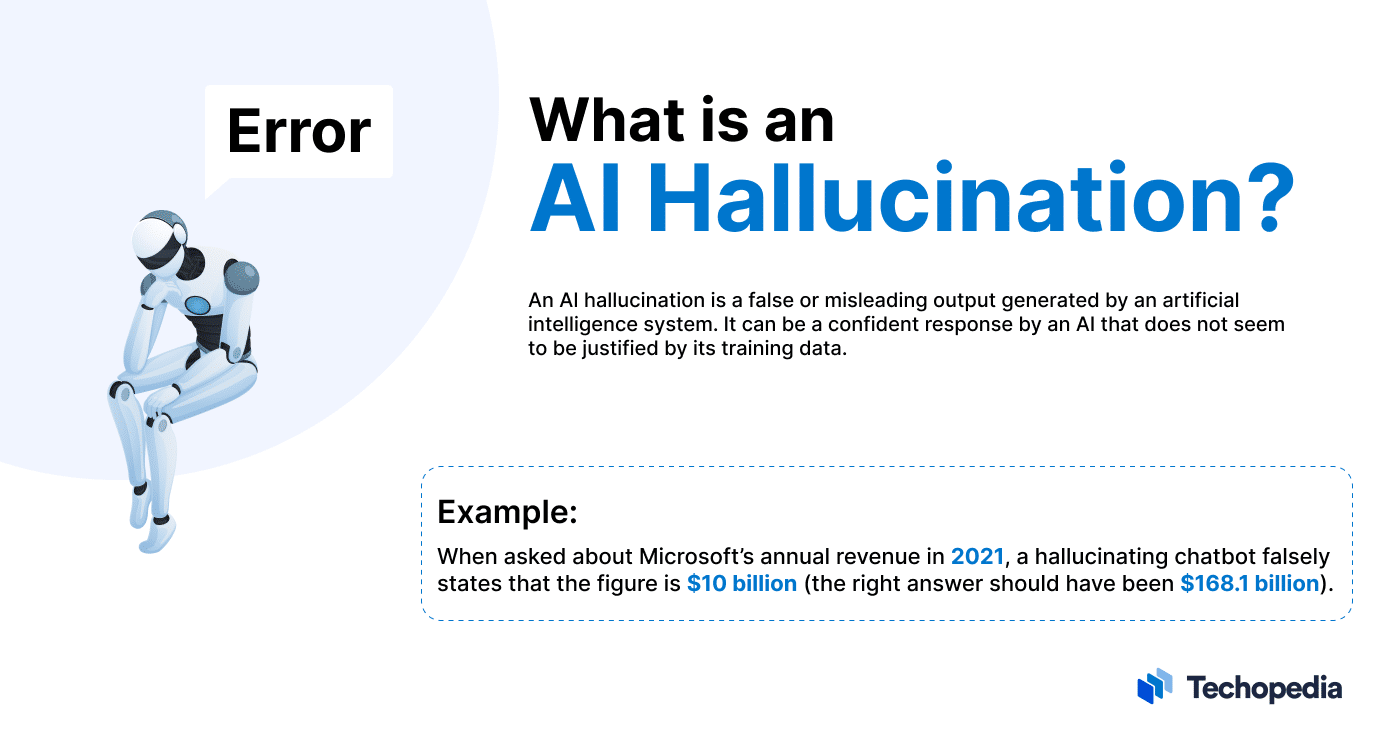

What is an AI Hallucination?

An AI hallucination occurs when a large language model (LLM), such as OpenAI’s GPT-4 or Google’s PaLM, makes up false information or facts that aren’t based on real data or events.

Avivah Litan, VP Analyst at Gartner, explains:

Hallucinations are completely fabricated outputs from large language models. Even though they represent completely made-up facts, the LLM output presents them with confidence and authority.

Generative AI-driven chatbots can fabricate any factual information, from names, dates, and historical events to quotes or even code.

Hallucinations are common enough that OpenAI actually issues a warning to users within ChatGPT stating that “ChatGPT may produce inaccurate information about people, places, or facts.”

The challenge for users is to sort through what information is true and what isn’t.

Examples of AI Hallucinations

While new examples of AI hallucinations emerge all the time, one of the most notable occurred in a promotional video released by Google in February 2023. In it, Google’s AI chatbot Bard incorrectly claimed that the James Webb Space Telescope took the first image of a planet outside of the solar system.

Likewise, in the launch demo of Microsoft Bing AI in February 2023, Bing analyzed an earnings statement from Gap, providing an incorrect summary of facts and figures.

These examples illustrate that users can’t afford to trust chatbots to generate truthful responses all of the time. However, the risks posed by AI hallucinations go well beyond spreading misinformation.

In fact, according to Vulcan Cyber’s research team, ChatGPT can generate URLs, references, and code libraries that don’t exist or even recommend potentially malicious software packages to unsuspecting users.

As a result, organizations and users experimenting with LLMs and generative AI must do their due diligence when working with these solutions and double-check the output for accuracy.
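One way to double-check generated code for libraries that may not exist is to compare the packages it imports against a list the organization has already vetted. The following is a minimal sketch of that idea, not a complete defense: the allowlist `VETTED_PACKAGES`, the function `unvetted_imports`, and the package name `fastjsonx` are all hypothetical and chosen only for illustration.

```python
import ast

# Hypothetical allowlist: packages the organization has already vetted.
VETTED_PACKAGES = {"json", "os", "requests", "numpy"}


def unvetted_imports(generated_code: str, vetted: set[str] = VETTED_PACKAGES) -> set[str]:
    """Return top-level modules imported by the code that are not on the allowlist."""
    tree = ast.parse(generated_code)
    found = set()
    for node in ast.walk(tree):
        if isinstance(node, ast.Import):
            for alias in node.names:
                found.add(alias.name.split(".")[0])
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            # level == 0 means an absolute import; relative imports are skipped.
            found.add(node.module.split(".")[0])
    return found - vetted


# Example LLM output; "fastjsonx" is a made-up name standing in for a
# hallucinated package.
llm_output = """
import requests
import fastjsonx
from numpy.linalg import norm
"""

print(sorted(unvetted_imports(llm_output)))  # prints ['fastjsonx']
```

Anything the function returns should be reviewed by a person before it is installed. A name that is missing from the allowlist is not necessarily malicious, and a name that is on it only says the package was vetted at some point.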

What Causes AI Hallucinations?

Some of the key factors behind AI hallucinations are:

- Outdated or low-quality training data;

- Incorrectly classified or labeled data;

- Factual errors, inconsistencies, or biases in the training data;

- Insufficient programming to interpret information correctly;

- Lack of context provided by the user;

- Difficulty inferring the intent behind colloquialisms, slang expressions, or sarcasm.

It’s important to write prompts in plain English with as much detail as possible. Even so, it is ultimately the vendor’s responsibility to implement sufficient programming and guardrails to mitigate the potential for hallucinations.

What Are the Dangers of AI Hallucination?

One of the main dangers of AI hallucination is that the user relies too much on the accuracy of the AI system’s output.

While some individuals like Microsoft’s CEO Satya Nadella have argued that AI systems like Microsoft Copilot may be “usefully wrong,” these solutions can spread misinformation and hateful content if they’re left unchecked.

LLM-generated misinformation is challenging to address because these solutions can generate content that looks detailed, convincing, and reliable on the surface but is, in reality, incorrect, leaving the user believing untrue facts and information.

If users take AI-generated content at face value, false information can spread across the Internet as a whole.

Lastly, there is also the risk of legal and compliance liabilities. For example, if an organization uses an LLM-powered service to communicate with customers, and that service issues guidance that damages a user’s property or regurgitates offensive content, the organization could be at risk of legal action.

How Can You Detect AI Hallucinations?

The best way for a user to detect if an AI system is hallucinating is to manually fact-check the output provided by the solution with a third-party source. Checking facts, figures, and arguments against news sites, industry reports, studies, and books via a search engine can help to verify whether a piece of information is correct or not.

Although manual checking is a good option for users who want to detect misinformation, in an enterprise environment, it might not be logistically or economically viable to verify each segment of information.

For this reason, it is a good idea to consider using automated tools to double-check generative AI solutions for hallucination. For example, Nvidia’s open-source tool NeMo Guardrails can identify hallucinations by cross-checking the output of one LLM against another.

Similarly, Got It AI offers a solution called TruthChecker, which uses AI to detect hallucinations in GPT-3.5+ generated content.

Of course, organizations that do use automated tools like NeMo Guardrails and TruthChecker to fact-check AI systems should do their due diligence in verifying how effective these solutions are at identifying misinformation, and conduct a risk assessment to determine whether any other actions are needed to eliminate potential liability.
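The idea of cross-checking the output of one LLM against another can be illustrated with a small sketch. This is not the API of NeMo Guardrails or TruthChecker. The functions `agreement` and `cross_check`, the stand-in models `model_a` and `model_b`, and the 0.5 threshold are all assumptions made for illustration, and word overlap is a deliberately crude comparison that a real tool would replace with something more robust.

```python
import re
from typing import Callable


def _tokens(text: str) -> set[str]:
    """Lowercase word and number tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def agreement(a: str, b: str) -> float:
    """Jaccard overlap of the word sets of two answers (0.0 to 1.0)."""
    ta, tb = _tokens(a), _tokens(b)
    if not ta and not tb:
        return 1.0
    return len(ta & tb) / len(ta | tb)


def cross_check(
    prompt: str,
    primary: Callable[[str], str],
    checker: Callable[[str], str],
    threshold: float = 0.5,  # assumed cut-off; tune on real data
) -> dict:
    """Ask two models the same question and flag low agreement for review."""
    answer = primary(prompt)
    second_opinion = checker(prompt)
    score = agreement(answer, second_opinion)
    return {"answer": answer, "score": score, "needs_review": score < threshold}


# Stand-in models returning canned strings; a real setup would call two LLMs.
def model_a(prompt: str) -> str:
    return "The first exoplanet image was taken by the James Webb Space Telescope."


def model_b(prompt: str) -> str:
    return "The Very Large Telescope captured the first exoplanet image in 2004."


result = cross_check("Which telescope took the first exoplanet image?", model_a, model_b)
print(result["needs_review"])  # prints True
```

Low agreement only signals that an answer deserves a closer look. Two models can also agree on the same wrong answer, so a check like this supplements human fact-checking rather than replacing it.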

The Bottom Line

AI and LLMs may unlock some exciting capabilities for enterprises, but it’s important for users to be mindful of the risks and limitations of these technologies to get the best results.

Ultimately, AI solutions provide the most value when they’re used to augment human intelligence rather than attempting to replace it.

So long as users and enterprises recognize that LLMs have the potential to fabricate information and verify output data elsewhere, the risks of spreading or absorbing misinformation are minimized.